oioiiooixiii

FFmpeg: RGB affected luma cycle

$ rgbLumaBlendCycle_FFmpeg.sh 'image1.jpg' 'screen'

$ rgbLumaBlendCycle_FFmpeg.sh 'image2.jpg' 'difference'

$ rgbLumaBlendCycle_FFmpeg.sh 'image3.jpg' 'pinlight' 'REV'

#!/usr/bin/env bash

# FFmpeg ver. 4.2.2+

# RGB affected luma cycle: Each colour plane is extracted and blended with the

# original image to adjust overall image brightness. The result of each blend

# is faded into the next, before blending back to the original image.

# Parameters:

# $1 : Filename

# $2 : Blend type (e.g. average, screen, difference, pinlight, etc.)

# $3 : Reverse blend order (any string to enable)

# version: 2020.07.15_12.28.31

# source: https://oioiiooixiii.blogspot.com

function main()

{

local mode="$2"

local name="$1"

local layerArr=('[a][colour1]' '[b][colour2]' '[c][colour3]'

'[colour1][a]' '[colour2][b]' '[colour3][c]')

local layerIndex="${3:+3}" && layerIndex="${layerIndex:-0}"

# Array contains values for both blend orders; index is offset if $3 is set

ffmpeg \

-i "$name" \

-filter_complex "

format=rgba,loop=loop=24:size=1:start=0,

split=8 [rL][gL][bL][colour1][colour2][colour3][o][o1];

[rL]extractplanes=r,format=rgba[a];

[gL]extractplanes=g,format=rgba[b];

[bL]extractplanes=b,format=rgba[c];

${layerArr[layerIndex++]}blend=all_mode=${mode}[a];

${layerArr[layerIndex++]}blend=all_mode=${mode}[b];

${layerArr[layerIndex]}blend=all_mode=${mode}[c];

[o][a]xfade=transition=fade:duration=0.50:offset=0,format=rgba[a];

[a][b]xfade=transition=fade:duration=0.50:offset=0.51,format=rgba[b];

[b][c]xfade=transition=fade:duration=0.50:offset=1.02,format=rgba[c];

[c][o1]xfade=transition=fade:duration=0.50:offset=1.53,format=rgba

" \

"${name}-${mode}.mkv"

}

main "$@"

download: rgbLumaBlendCycle_FFmpeg.sh
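
The index-offset trick used for the reverse blend order can be seen in isolation in the minimal sketch below. `blend_pairs` is a hypothetical helper, not part of the original script; it only prints which three input pairs would be selected, depending on whether an extra argument is given.

```shell
# Hypothetical helper (not in the original script): print the three blend
# input pairs chosen for an optional "reverse" argument.
blend_pairs() {
    local layerArr=('[a][colour1]' '[b][colour2]' '[c][colour3]'
                    '[colour1][a]' '[colour2][b]' '[colour3][c]')
    # ${1:+3} expands to 3 when $1 is set and non-empty, otherwise to nothing;
    # ${layerIndex:-0} then supplies 0 as the default offset.
    local layerIndex="${1:+3}"
    layerIndex="${layerIndex:-0}"
    # Take three consecutive entries starting at the offset
    printf '%s\n' "${layerArr[@]:layerIndex:3}"
}

blend_pairs          # normal order: starts at index 0
blend_pairs 'REV'    # reversed order: starts at index 3
```

Any non-empty string works as the third argument of the script, because only its presence is tested, never its value.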

Image Credits:

Henry Huey - "Alice in Wonderland - MAD Productions 5Sep2018 hhj_6811"

Attribution-NonCommercial 2.0 Generic (CC BY-NC 2.0)

https://www.flickr.com/photos/henry_huey/43768692515/

Henry Huey - "Alice in Wonderland - MAD Productions 5Sep2018 hhj_6869"

Attribution-NonCommercial 2.0 Generic (CC BY-NC 2.0)

https://www.flickr.com/photos/henry_huey/29740096427/

Henry Huey - "Alice in Wonderland - MAD Productions 5Sep2018 hhj_6848"

Attribution-NonCommercial 2.0 Generic (CC BY-NC 2.0)

https://www.flickr.com/photos/henry_huey/29740096117/

FFmpeg: Improved 'Rainbow-Trail' effect

* Includes sound 🔊

I have updated the script for this FFmpeg 'rainbow' effect I created in 2017¹ as there were numerous flaws, errors, and inadequacies in that earlier version. One major issue was the inability to colorkey with any colour other than black; this has been resolved.

This time, the effect is based on the 'extractplanes' filter and the alpha levels created after using a 'colorkey' filter. This produces a much more refined result, with better colour shaping, while maintaining most of the original foreground subject. The 'extractplanes' filter can even be removed from the filtergraph to create an alternative, more subtle effect.

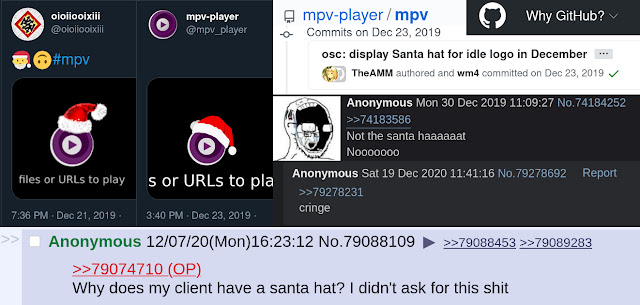

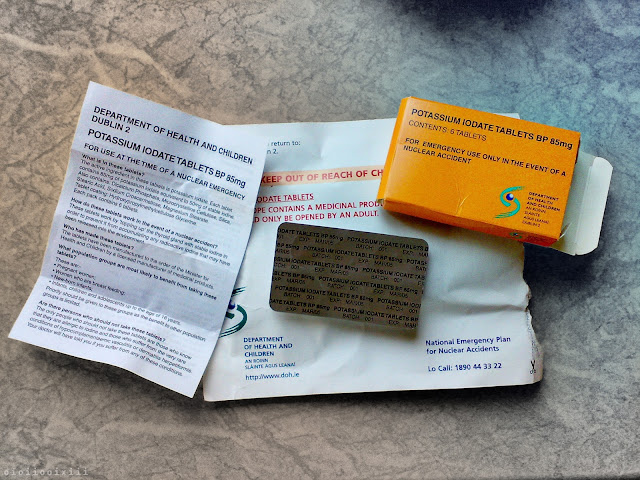

Irish State-Issued Iodine Tablets: 'For emergency use only in the event of a nuclear accident'

September, 2001: Soon after the "9/11" terrorist attacks in America, a sudden spike in public concern regarding the possibility of an accident, or terrorist attack, at the Sellafield nuclear reprocessing plant causes the Irish government to quickly draw up plans to issue iodine tablets to every household in Ireland.¹

At the time, Ireland was the only European country to take such a measure, and it was a source of both national and international ridicule,² even though some other countries also had concerns over the safety of the nuclear facility.³ Whatever the validity or naivety of such a plan, just like with the 'Millennium Candles' that were set to be delivered to celebrate the year 2000, many households failed to even get delivery of the medicine.

Issued in June 2002,⁴ the original tablets had an expiry date of 2005 and plans to reissue new quantities in 2008 were scrapped.⁵ In 2015, the idea of reissuing iodine tablets was once again quashed when the RPII (Radiological Protection Institute of Ireland) found that the consumption of potassium iodate tablets in Ireland "would not be justified".⁶

From the original 2001 Irish health department press release: "In most nuclear accident situations staying indoors and avoiding consumption of certain foods that may be contaminated would be the advice given."⁷

tags: history, Ireland, photography, politics, terrorism

China: Spread the Doom

It will be a silent spring.

What I've learned about the 'Wuhan Flu' from Western experts so far:

SARS-CoV-2 was developed by the Chinese to wipe out Taiwan. It was developed by stealing it from America via Canada, using a Dutch boat travelling through the middle-east.

It was engineered further with extra HIV bits and given to a bat. This bat flew out of the lab accidentally on purpose, and ended up in a soup eaten in Palau 3 years ago (time-travel is one of the side effects).

China is covering it up by being completely open about it, and shutting down entire cities and tanking its economy. All the numbers are fake though. Real victims are running at about 100 million. Which basically means the world is coming to an end. China released it now to keep the population down and/or as part of Bill Gates' globalist vaccine plan.

China doesn't care about controlling the virus, that's why they've taken all these measures, once again, tanking their economy. This is all perfectly fine though because this entire scheme is all part of a game of 7D chess to silence the Uyghur protesters in Hong Kong, and in doing so, own the Yanks.

The WHO has been paid off to help remove the second amendment from the American constitution.

I assume China will wait until they finish boring their tunnel under America¹ before unleashing the full terror!

¹ https://www.youtube.com/watch?v=0dVu2KLtEYA

context: https://en.wikipedia.org/wiki/2019_novel_coronavirus

title reference: https://drive.google.com/open?id=1MSYkzFKuyVp1-oCWcENa6Lq_UEBMxIf8

related: "It will be a silent spring."

further info: https://cnc.fandom.com/wiki/Desolator_(Red_Alert_2)

public domain images used: https://commons.wikimedia.org/wiki/File:Tiananmen_beijing_Panorama.jpg

Highlander: Coomer MacLeod

Here is an alternate 'Director's Cut', with audio from the film also included.

[Here I will explain the joke, for the purposes of posterity, and SEO. It might be best to skip it.]

An old, if not dead, meme at this stage, but one I wanted to do for some weeks: Conor MacLeod as 'Coomer MacLeod'. It ties in with the even older joke about the sexual nature of the 'Quickening' experience in the Highlander movie franchise; an orgasmic rush that envelops a person after they defeat their enemy by decapitating them. 'Real smooth shave!'

context: https://knowyourmeme.com/memes/coomer

context: https://en.wikipedia.org/wiki/Highlander_(film)

All video editing done with 'Flowblade' version 2.2 (GPL-3.0-or-later): https://github.com/jliljebl/flowblade

🌱🐸☘️🐌🌱 (~1cm) #garden ¹

'The moist moss makes mellow; both grass, and fellow.'²

Update: 2019.09.17 (~2.5cm)

¹ originally published: August 17, 2019 https://twitter.com/oioiiooixiii/status/1162634207274967040

² originally published: August 17, 2019 https://twitter.com/oioiiooixiii/status/1162640769158733824

tags: animals, photography, Twitter

Degrading jpeg images with repeated rotation - via Bash (FFmpeg and ImageMagick)

A continuation of the decade-old topic of degrading jpeg images by repeated rotation and saving. This post briefly demonstrates the process using FFmpeg and ImageMagick in a Bash script. A Python script achieving similar results was published previously, and has recently been updated. There are many posts on this subject, and they can all be accessed by searching for the 'jpeg rotation' tag.

posts: https://oioiiooixiii.blogspot.com/search/label/jpeg%20rotation

The two basic commands are below. Both versions rotate an image 90 degrees clockwise, and each overwrites the original image. They should be run inside a loop to create progressively more degraded images.

ImageMagick: The quicker of the two, it uses the standard 'libjpeg' library for saving images.

mogrify -rotate "90" -quality "74" "image.jpg"

FFmpeg: Saving is done with the 'mjpeg' encoder, creating significantly different results.

ffmpeg -i "image.jpg" -vf "transpose=1" -q:v 12 "image.jpg" -y

There are many options and ways to extend each of the basic commands. For FFmpeg, one such way is to use the 'noise' filter to help create entropy in the image while running. It also has the effect of discouraging the gradual magenta-shift caused by the mjpeg encoder.
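
The basic commands above are meant to be run in a loop. A minimal sketch of such a loop follows; `repeat_on_file` and `rotate_ffmpeg_noise` are hypothetical names introduced here for illustration, and the noise strength of 7 is just an example value. The FFmpeg wrapper assumes, as the basic command does, that reading and overwriting the same jpeg in one call works on your system.

```shell
# Hypothetical helper: run a command COUNT times against FILE.
# Usage: repeat_on_file FILE COUNT COMMAND [ARGS...]
# FILE is appended as the last argument of COMMAND on every pass.
repeat_on_file() {
    local file="$1" count="$2" i
    shift 2
    for (( i = 0; i < count; i++ )); do
        "$@" "$file" || return 1   # stop at the first failure
    done
}

# Hypothetical wrapper for the FFmpeg version, since the file name is needed
# twice (input and output). Adds the 'noise' filter after the rotation.
rotate_ffmpeg_noise() {
    ffmpeg -loglevel error -y -i "$1" \
        -vf "transpose=1,noise=alls=7:allf=u" -q:v 12 "$1"
}

# Examples (assume the tools are installed and image.jpg exists):
# repeat_on_file "image.jpg" 100 mogrify -rotate 90 -quality 74
# repeat_on_file "image.jpg" 100 rotate_ffmpeg_noise
```

Work on a copy of the image, as every pass overwrites the file it is given.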

A functional (but basic) Bash script is presented later in this blog post. It allows for the choice between ImageMagick or FFmpeg versions, as well as allowing some other parameters to be set. Directly below is another montage of images created using the script. Run-time parameters for each result are given at the end of this post.

Running the script without any arguments (except for the image file name) will invoke ImageMagick's 'mogrify' command, rotating the image 500 times, and saving at a jpeg quality of '74'. Note that when the FFmpeg version is running, the jpeg quality is crudely inverted, to use the 'q:v' value of the 'mjpeg' encoder.
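
The 'crude inversion' is simply 100 minus the jpeg quality. The sketch below shows that arithmetic next to a possible alternative that scales the value instead. `crude_qscale` and `scaled_qscale` are hypothetical names, and the 2 to 31 range is an assumption based on the commonly cited usable 'q:v' range for the 'mjpeg' encoder; it is not something the script itself relies on.

```shell
# The script's own mapping: q:v = 100 - quality
crude_qscale() { echo "$(( 100 - $1 ))"; }

# Hypothetical alternative: map quality 1..100 linearly onto q:v 31..2
# (assumes a usable q:v range of 2-31; integer arithmetic, rounds down)
scaled_qscale() {
    local quality="$1"
    (( quality < 1 )) && quality=1
    (( quality > 100 )) && quality=100
    echo "$(( 31 - (quality - 1) * 29 / 99 ))"
}

crude_qscale 74     # prints 26
scaled_qscale 74    # prints 10
```

With the crude mapping, any quality below 69 gives a 'q:v' above 31, so under the same assumption those low qualities may all behave alike.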

The parameters for the script: [filename: string] [rotations: 1-n] [quality: 1-100] [frames: (any string)] [version: (any string for FFmpeg)] [noise: 1-100]

#!/bin/bash

# Simple Bash script to degrade a jpeg image by repeated rotations and saves,

# using either FFmpeg or ImageMagick. N.B. Starting image must be a jpeg.

# Example: rotateDegrade.sh "image.jpg" "1200" "67" "no" "FFmpeg" "21"

# Run on image.jpg, 1200 rotations, quality=67, no frames, use FFmpeg, noise=21

# source: oioiiooixiii.blogspot.com

# version: 2019.08.22_13.57.37

# All relevant code resides in this function

function rotateDegrade()

{

local rotations="${2:-500}" # number of rotations

local quality="${3:-74}" # Jpeg save quality (note inverse value for FFmpeg)

local saveInterim="${4:-no}" # To save every full rotation as a new frame

local version="${5:-IM}" # Choice of function (any other string for FFmpeg)

local ffNoise="${6:-0}" # FFmpeg noise filter

# Name of new file created to work on

local workingFile="${1}_r${rotations}-q${quality}-${version}-n${ffNoise}.jpg"

cp "$1" "$workingFile" # make a copy of the input file to work on

# N.B. consider moving above file to volatile memory e.g. /dev/shm

# ImageMagick and FFmpeg sub-functions

function rotateImageMagick() {

mogrify -rotate "90" -quality "$quality" "$workingFile"; }

function rotateFFmpeg() {

ffmpeg -i "$workingFile" -vf "format=rgb24,transpose=1,

noise=alls=${ffNoise}:allf=u,format=rgb24" -q:v "$((100-quality))"\

"$workingFile" -y -loglevel panic &>/dev/null; }

# Main loop for repeated rotations and saves

for (( i=0;i<"$rotations";i++ ))

{

# Save each full rotation as a new frame (if enabled)

[[ "$saveInterim" != "no" ]] && [[ "$(( 10#$i%4 ))" -lt 1 ]] \

&& cp "$workingFile" "$(printf %07d $((i/4)))_$workingFile"

# Rotate by 90 degrees and save, using whichever function chosen

# (if/else, so a failed ImageMagick call does not fall through to FFmpeg)

if [[ "$version" == "IM" ]]; then

rotateImageMagick

else

rotateFFmpeg

fi

# Display progress

displayRotation "$i" "$rotations"

}

}

# Simple textual feedback of progress shown in terminal

function displayRotation() { clear;

case "$(( 10#$1%4 ))" in

3) printf "Total: $2 / Processing: $1 🡄 ";;

2) printf "Total: $2 / Processing: $1 🡇 ";;

1) printf "Total: $2 / Processing: $1 🡆 ";;

0) printf "Total: $2 / Processing: $1 🡅 ";;

esac

}

# Driver function

function main { rotateDegrade "$@"; echo; }; main "$@"

download: rotateDegrade.sh

python version: https://oioiiooixiii.blogspot.com/2014/08/jpeg-destruction-via-repeated-rotate.html

original image: https://www.flickr.com/photos/flowizm/19148678846/ (CC BY-NC-SA 2.0)

parameters for top image, left to right:

original | rotations=300,quality=52,version=IM | rotations=200,quality=91,version=FFmpeg,noise=7

parameters for bottom image, left to right:

rotations=208,quality=91,version=FFmpeg,noise=7 | rotations=300,quality=52,version=FFmpeg,noise=0 | rotations=500,quality=74,version=IM | rotations=1000,quality=94,version=FFmpeg,noise=7 | rotations=300,quality=94,version=FFmpeg,noise=16
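
When the frames option is enabled, the script copies the working file before every fourth rotation, so each saved frame has the same orientation as the original. The sketch below isolates that test and the zero-padded frame naming; `is_full_rotation` and `frame_name` are hypothetical helper names, not functions from the script.

```shell
# Hypothetical helpers mirroring the frame-saving logic of the script.
# 10# forces base 10, so a counter with leading zeros is not read as octal.
is_full_rotation() { (( 10#$1 % 4 == 0 )); }

# Frame number is the count of completed full rotations, padded to 7 digits
frame_name() { printf '%07d_%s' "$(( 10#$1 / 4 ))" "$2"; }

for i in 0 1 2 3 4 8; do
    if is_full_rotation "$i"; then
        echo "rotation $i -> $(frame_name "$i" "image.jpg")"
    fi
done
# prints:
# rotation 0 -> 0000000_image.jpg
# rotation 4 -> 0000001_image.jpg
# rotation 8 -> 0000002_image.jpg
```

The fixed-width numbering keeps the frames in order when they are later read as an image sequence.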

tags: BASH, FFmpeg, glitch, image destruction, ImageMagick, jpeg rotation, programming

Red Dawn 2019: Reinstate 八一

Given the current state of things¹, it might be worth considering a re-release of 'Red Dawn (2012)', containing the original People's Republic of China story-line and imagery. It might not make the film any better, but at least the story and scenery would make a modicum more sense. Either way, it would be pretty cool to see Chinese and Russian military on American streets, even in such a silly film as this.

¹ https://www.reuters.com/article/us-un-nuclear/risk-of-nuclear-war-now-highest-since-ww2-un-arms-research-chief-says-idUSKCN1SR24H

Below is a small list of [some defunct] URLs relating to the original version of the film. There are images and texts about the original making of the film, as well as reviews and concerns about its release (circa 2010).

film info: https://en.wikipedia.org/wiki/Red_Dawn_(2012_film)

official website: http://reddawn2010.com/

images of filming in Detroit: http://detroitfunk.com/red-dawn/

'Russians' turn up on set: http://reddawn2010.com/index.php?option=com_content&view=article&id=88:those-russians-just-cant-stay-out

concept art: http://www.productionillustration.com/gallery/red-dawn/

Some posters used in the film: http://hiro-tan.org/~ekoontz/red_dawn/

Review of the original version: http://www.libertasfilmmagazine.com/exclusive-libertas-sees-the-uncensored-version-of-mgms-new-red-dawn/

Anti-film preview: https://www.theawl.com/2010/05/real-america-red-dawn-remade-china-is-coming-for-our-children/

Anti-Film website: http://www.anti-reddawn2010.com/

Red Dawn News Twitter: https://twitter.com/reddawnnews

Forum thread discussing film changes: https://www.alternatehistory.com/forum/threads/red-dawn-remake-finally-coming-out.213393/

Some other images relating to the film in its current state:

A poor attempt at repainting one of the PLA stars to one representing the KPA.

A scene in the film where the symbol on a sign rapidly changes back and forth.

[see full video: https://drive.google.com/open?id=1JYxl5qNOCYY7HUoUkPzeblFcO07ueg-f]

The average colour of each frame in the film.

[explanation: https://oioiiooixiii.blogspot.com/2018/09/ffmpeg-fapa-frame-averaged-pixel-array.html]

tags: China, Cold War, movies, pixel array, propaganda, Russia, USA, war, Россия
